Microsoft earns top spots in Gartner’s 2025 Magic Quadrants for Data Science and Machine Learning Platforms, AI Application Development Platforms, and Data Integration Tools, spotlighting Fabric and Foundry as game-changers for unified data and intelligent apps.

These recognitions validate how Fabric builds governed data foundations while Foundry orchestrates production AI agents, delivering real ROI across industries.

Gartner Recognition Highlights Strategic Strength

Gartner places Microsoft furthest on both Completeness of Vision and Ability to Execute in AI application development, crediting Foundry’s secure grounding in enterprise data through more than 1,400 connectors.

In Data Science and ML, Azure Machine Learning, built on Foundry, unifies Fabric, Purview, and agent services to cover the full AI lifecycle, from prototyping to scale.

Fabric leads data integration with OneLake’s SaaS model, serving 28,000 customers with 60% year-over-year growth and supporting real-time analytics and AI readiness.

How Fabric and Foundry Work Together

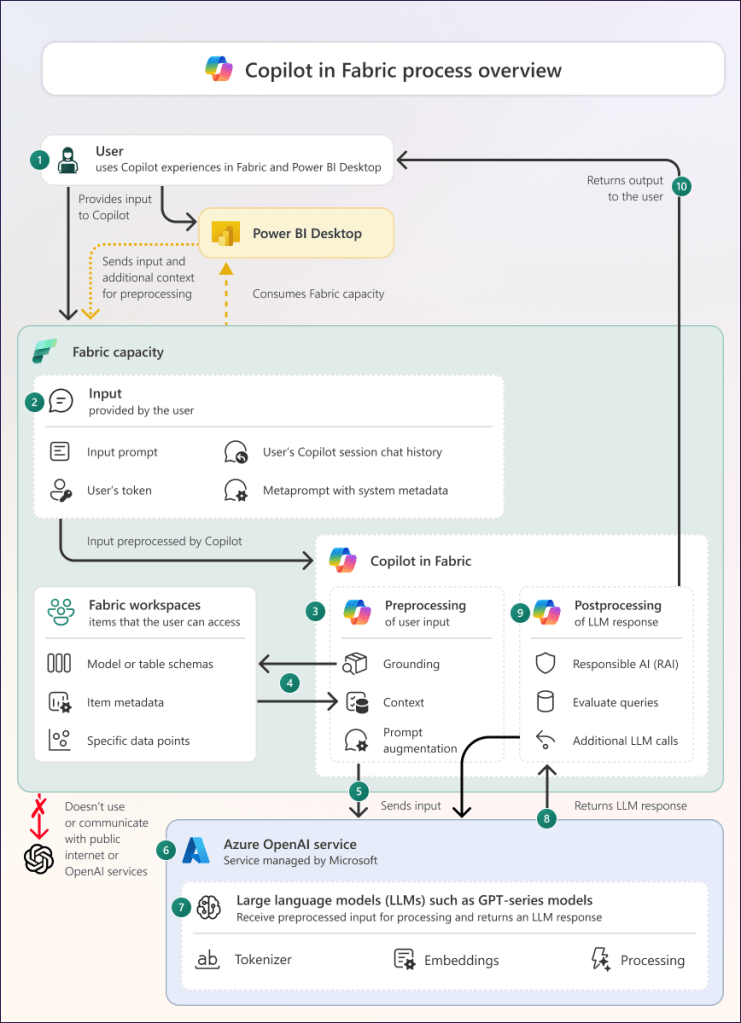

Fabric centralizes lakehouses, warehouses, pipelines, and Power BI in OneLake for governed, multi-modal data. Foundry agents connect via Fabric Data Agents, querying SQL, KQL, or DAX securely with passthrough auth.
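One way to picture the routing described above is a simple lookup from data source type to query language. The sketch below is purely illustrative: `QUERY_LANGUAGE`, `GroundedRequest`, and `plan_request` are hypothetical names, not part of any Fabric or Foundry SDK, and the source-type labels are assumptions. It only shows the idea that each request carries the caller's own identity (passthrough auth) rather than a shared service credential.

```python
from dataclasses import dataclass

# Hypothetical mapping from a Fabric source type to the query language
# an agent would use against it. The labels are assumptions for this sketch.
QUERY_LANGUAGE = {
    "lakehouse": "SQL",
    "warehouse": "SQL",
    "kql_database": "KQL",
    "semantic_model": "DAX",
}


@dataclass(frozen=True)
class GroundedRequest:
    """A planned query: what to ask, where, in which language, and as whom."""
    question: str
    source_type: str
    language: str
    on_behalf_of: str  # identity whose permissions apply (passthrough)


def plan_request(question: str, source_type: str, user: str) -> GroundedRequest:
    """Pick the query language for a source and attach the caller's identity."""
    try:
        language = QUERY_LANGUAGE[source_type]
    except KeyError:
        raise ValueError(f"unsupported source type: {source_type!r}") from None
    return GroundedRequest(question, source_type, language, user)


if __name__ == "__main__":
    request = plan_request("Total wastage by store last week",
                           "warehouse", "analyst@example.com")
    print(request.language, request.on_behalf_of)
```

Keeping the user identity on the request object is the design point: whatever runs the query downstream can enforce that user's existing permissions instead of granting the agent broad access.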

This pairing grounds AI in real data: agents forecast from streams, summarize warehouses, or visualize lakehouses, reducing hallucinations and avoiding custom code.

Developers prototype locally with Semantic Kernel or AutoGen, then deploy to Foundry for orchestration, observability, and MLOps via Azure ML fine-tuning.

Case Study: James Hall Boosts Profitability with Fabric

UK wholesaler James Hall mirrors half a billion rows across 50 tables in Fabric, serving 30+ reports to 400 users for sales, stock, and wastage insights.

Fabric’s Real-Time Intelligence processes high-granularity streams as they arrive, driving efficiency and profitability through unified dashboards instead of siloed reports.

By adding Foundry, James Hall could extend this to agents that answer requests such as “Predict stock shortages by store” through Data Agents, blending Fabric analytics with AI reasoning to drive proactive orders.

Another Example: Retail Forecasting with Unified Intelligence

A global retailer ingests POS, inventory, and weather data into Fabric pipelines. Real-Time Intelligence detects demand spikes; lakehouses run Spark ML for baselines.
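To make "detects demand spikes" concrete, here is a minimal sketch of one common approach, a rolling z-score over recent sales. This is an assumption for illustration, not how Real-Time Intelligence works internally; `detect_spikes`, the 7-point window, and the threshold of 3 standard deviations are all hypothetical choices.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(sales, window=7, threshold=3.0):
    """Return indices whose value exceeds the rolling mean of the previous
    `window` points by more than `threshold` standard deviations."""
    history = deque(maxlen=window)  # most recent `window` observations
    spikes = []
    for i, value in enumerate(sales):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat baselines (sigma == 0) to avoid division by zero.
            if sigma > 0 and (value - mu) / sigma > threshold:
                spikes.append(i)
        history.append(value)
    return spikes


if __name__ == "__main__":
    daily_units = [100, 102, 98, 101, 99, 100, 103, 250, 101]
    print(detect_spikes(daily_units))  # prints [7]
```

Note that a detected spike still enters the rolling window, so it inflates the baseline for the next few points. A production detector would likely handle that, along with seasonality and promotions.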

Foundry agents query these via endpoints: “Forecast Black Friday sales by category, factoring promotions.” Multi-step orchestration pulls Fabric outputs, applies reasoning, and embeds results in Teams copilots.

Based on similar deployments, this cuts forecasting time from days to minutes and improves accuracy by 25-40% over legacy tools.

Capabilities That Set Them Apart

Fabric’s SaaS platform spans ingestion through visualization on OneLake, with Copilot making notebook and pipeline development 50% faster.

Foundry adds agentic AI: Foundry IQ grounds responses in Fabric data; Tools handle documents, speech, and Microsoft 365 integration; and reinforcement fine-tuning (RFT) adapts models dynamically.

Security is a strength: role-based access control (RBAC), auditing, Purview lineage, and data residency controls support compliance in finance, healthcare, and other regulated operations.

Gartner notes this ecosystem’s interoperability with GitHub, VS Code, and Azure Arc for hybrid/edge, powering IIoT leaders too.

Business Impact and ROI Metrics

Customers report 35-60% savings in development time, 25% better predictions, and seamless scaling from proof of concept (PoC) to production.

James Hall gained profitability insights across sites; insurers cut claims 25% via predictive agents.

Data leaders can start with Fabric pilots on high-volume workloads, add Foundry Data Agents for top queries, then scale agents org-wide.

Path Forward for Enterprises

Build on these Leader placements by auditing your data estate against Gartner’s criteria: unify data in Fabric, then build agents in Foundry. Pilot with sales or operations use cases for quick wins.

As Gartner’s evaluations evolve, Microsoft’s roadmap promises deeper agentic AI, global fine-tuning, and adaptive cloud integration.

This stack turns data into decisions at enterprise scale, a result backed by analysts and adopters alike.

#MicrosoftFabric #AzureAIFoundry #GartnerMagicQuadrant #DataAI