While migrating its SAP systems to Azure, Microsoft fine-tuned its capacity management processes, minimizing downtime, risk, and costs and improving employee efficiency. Optimizing on Azure allows us to design an SAP environment that is agile, efficient, and flexible enough to grow and change with our business.

Deciding to migrate your SAP systems to Azure is a big move, and taking the right steps can make the transition smooth and manageable. IoTCoast2Coast took a measured approach to moving its most sensitive data and confidential workloads along with its SAP systems.

The right approach makes it possible to migrate mission-critical SAP systems to Azure, gaining maximum cost savings, scalability, and agility, without disrupting business operations. Our horizontal strategy meant moving low-risk environments like our sandboxes first, giving us experience with Azure migration without risking critical business functions in the process. Using a vertical strategy to move entire low-impact systems gave us experience with Azure production processes.

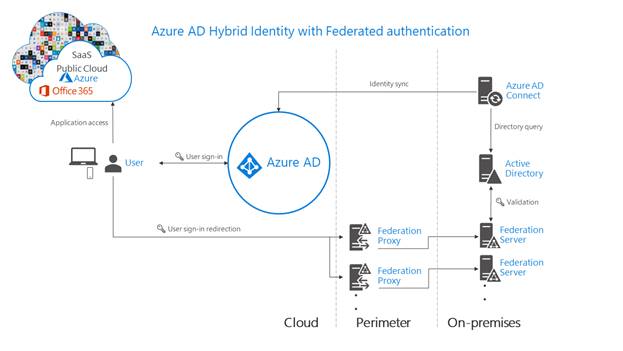

To configure Azure AD integration with SAP Cloud Platform, you need the following items:

- Azure Subscription

- Basic Azure knowledge

- An Azure AD tenant

- SAP Cloud Platform Identity Authentication tenant

- A user account in SAP Cloud Platform Identity Authentication with Admin permissions.

- An Azure AD subscription. If you don’t have an Azure AD environment, you can get a one-month trial.

- SAP Cloud Platform single sign-on enabled subscription

Throughout the document, these terms are used:

IaaS: Infrastructure as a service.

PaaS: Platform as a service.

SaaS: Software as a service.

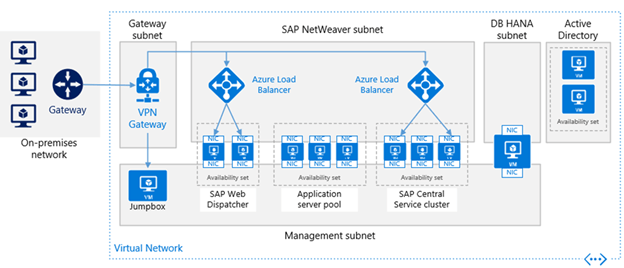

Azure is the preferred platform for SAP. As the top SAP-certified cloud provider, Azure is able to reliably run mission-critical SAP environments on a trusted cloud platform built for enterprises. Azure meets scalability, flexibility, and compliance needs.

Azure can run the most complete set of SAP applications across dev-test and production scenarios, with full support. Azure is certified for more SAP solutions than any other cloud provider, including SAP HANA, S/4HANA, SAP Business Suite, SAP NetWeaver, and SAP Business One, to name a few.

Azure also carries a large number of benefits when hosting the SAP platform, including:

The distributed nature of our business process environment led us to examine a broader solution—one that would provide comprehensive telemetry and monitoring for our SAP landscape, but also for any other business processes that comprised the end-to-end business landscape at Microsoft. Our implementation was driven by the following important goals:

Microsoft developed a telemetry platform in Azure called the Unified Telemetry Platform (UTP). UTP is a modern, scalable, reliable, and cost-effective telemetry platform that’s used in several different business process monitoring scenarios in Microsoft, including our SAP-related business processes.

UTP is built to enable service maturity and business process monitoring across CSEO. It provides a common telemetry taxonomy and integration with core Microsoft data monitoring services. UTP enables compliance and the maintenance of business standards for data integrity and privacy. While UTP is the implementation we chose, there are numerous ways to enable telemetry on Azure.

Capturing telemetry with Azure Monitor

To enable business-driven monitoring and a user-centric approach, UTP captures as many of the critical events within the end-to-end process landscape as possible. Embracing comprehensive telemetry in our systems meant capturing data from all available endpoints to build an understanding of how each process flowed and which of the SAP components were involved. Azure Monitor and its related Azure services serve as the core for our solution.

Azure Application Insights

Application Insights provides an Azure-based solution with which we can dig deep into our Azure-hosted SAP landscape and pull out all necessary telemetry data. Using Application Insights, we can automatically generate alerts and support tickets when our telemetry indicates a potential error situation.

Azure Log Analytics

Infrastructure telemetry such as CPU usage, disk throughput and other performance-related data is collected from Azure infrastructure components in the SAP environment using Log Analytics.

Azure Data Explorer

UTP uses Azure Data Explorer as the central repository for all telemetry data sent through Application Insights and Azure Monitor Logs from our application and infrastructure environment. Azure Data Explorer provides interactive analytics over enterprise big data; we use the Kusto Query Language to stitch together the end-to-end transaction flow for our business processes, both SAP and non-SAP.
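To make the idea of stitching a transaction flow concrete, here is a minimal sketch in Python rather than Kusto. It assumes every telemetry event carries a shared correlation ID and an ISO 8601 timestamp; the field names (`correlation_id`, `timestamp`, `source`, `step`) and the sample events are hypothetical and are not taken from UTP.

```python
from collections import defaultdict
from datetime import datetime


def stitch_flows(events):
    """Group events by correlation ID and order each group by timestamp."""
    flows = defaultdict(list)
    for event in events:
        flows[event["correlation_id"]].append(event)
    return {
        cid: sorted(group, key=lambda e: datetime.fromisoformat(e["timestamp"]))
        for cid, group in flows.items()
    }


# Hypothetical events from an SAP system and a non-SAP system.
events = [
    {"correlation_id": "ORD-1", "timestamp": "2020-01-01T10:05:00",
     "source": "SAP", "step": "InvoiceCreated"},
    {"correlation_id": "ORD-1", "timestamp": "2020-01-01T10:00:00",
     "source": "WebStore", "step": "OrderPlaced"},
    {"correlation_id": "ORD-2", "timestamp": "2020-01-01T11:00:00",
     "source": "WebStore", "step": "OrderPlaced"},
]

flows = stitch_flows(events)
steps = [e["step"] for e in flows["ORD-1"]]
```

In a real deployment the same grouping and ordering would more likely be expressed as a query in the data store itself; the sketch only shows the shape of the result, one ordered list of steps per business transaction.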

Azure Data Lake

UTP uses Azure Data Lake for long-term cold data storage. This data is taken out of the hot and warm streams and kept for reporting and archival purposes in Azure Data Lake to reduce the cost associated with storing large amounts of data in Azure Monitor.

The first step in enabling our telemetry platform was to create a reusable custom method and configuration table to drive consistent creation of the telemetry payloads. The configuration table defines the fixed structure of the payload according to the UTP standards.

The method then allows the calling application to pass an application-specific payload to populate the dynamic properties section of the telemetry events payload, and then adds SAP standard elements such as the event date and time, and system identifier. This method can then be called directly from any ABAP code, in either synchronous or asynchronous modes.
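The pattern described above, a fixed structure driven by a configuration table plus application-specific dynamic properties and standard elements, can be sketched as follows. This is a Python illustration, not the ABAP method itself; the `CONFIG_TABLE` contents, the field names, and the `build_payload` function are all hypothetical.

```python
import json
from datetime import datetime, timezone

# Hypothetical stand-in for the configuration table: the fixed part of the
# payload for each event type.
CONFIG_TABLE = {
    "OrderCreated": {
        "process": "OrderToCash",
        "component": "SAP-ERP",
        "schema_version": "1.0",
    },
}


def build_payload(event_type, dynamic_properties, system_id, now=None):
    """Combine fixed configuration, standard elements, and dynamic properties."""
    fixed = CONFIG_TABLE[event_type]  # raises KeyError for unconfigured events
    now = now or datetime.now(timezone.utc)
    return {
        **fixed,
        "event_type": event_type,
        "event_time": now.isoformat(),           # standard element: date and time
        "system_id": system_id,                  # standard element: system identifier
        "properties": dict(dynamic_properties),  # application-specific section
    }


payload = build_payload(
    "OrderCreated",
    {"order_id": "4711"},
    system_id="PRD",
    now=datetime(2020, 1, 1, tzinfo=timezone.utc),
)
payload_json = json.dumps(payload)
```

Keeping the fixed structure in one table means a calling application only supplies its own properties, which is what keeps payloads consistent across processes.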

For example, in most business processes in our ERP, we use SAP business process events to trigger our telemetry events. The business process events share a custom check routine framework built using SAP Business Rule Framework plus; then custom receiver classes build the dynamic properties of the payload and call the shared telemetry class.

When each event in the workflow is processed in SAP, the JSON payload is passed to Application Insights using an external REST service call, which connects to the UTP framework. The following figure contains an example from our non-delivery order-to-cash process.
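As a rough illustration of the REST call itself (not a reproduction of the figure), the sketch below wraps an event in a JSON envelope and prepares an HTTP POST request. The envelope shape is modeled on the Application Insights custom event format as we understand it and should be verified against current documentation; the endpoint URL, instrumentation key, and `build_track_request` function are placeholders, and the contract UTP actually uses is not described here.

```python
import json
import urllib.request


def build_track_request(endpoint_url, instrumentation_key, event_name,
                        properties, event_time):
    """Prepare (but do not send) a POST request carrying one telemetry event."""
    envelope = {
        "name": "Microsoft.ApplicationInsights.Event",
        "time": event_time,
        "iKey": instrumentation_key,
        "data": {
            "baseType": "EventData",
            "baseData": {"ver": 2, "name": event_name, "properties": properties},
        },
    }
    body = json.dumps(envelope).encode("utf-8")
    return urllib.request.Request(
        endpoint_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_track_request(
    endpoint_url="https://telemetry.example.invalid/track",      # placeholder URL
    instrumentation_key="00000000-0000-0000-0000-000000000000",  # placeholder key
    event_name="OrderCreated",
    properties={"order_id": "4711", "system_id": "PRD"},
    event_time="2020-01-01T00:00:00Z",
)
# Sending would be urllib.request.urlopen(request); it is not called here
# because the endpoint above is a placeholder.
```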

Azure Monitor maximizes the availability and performance of your applications and services by delivering a comprehensive solution for collecting, analyzing, and acting on telemetry from your cloud and on-premises environments.

Azure Monitor includes:

The diagram below gives a high-level view of Azure Monitor. At the center of the diagram are the data stores for metrics and logs, which are the two fundamental types of data used by Azure Monitor.

On the left are the sources of monitoring data that populate these data stores. On the right are the different functions that Azure Monitor performs with this collected data such as analysis, alerting, and streaming to external systems.

All data collected by Azure Monitor fits into one of two fundamental types, metrics and logs. Metrics are numerical values that describe some aspect of a system at a particular point in time. They are lightweight and capable of supporting near real-time scenarios. Logs contain different kinds of data organized into records with different sets of properties for each type. Telemetry such as events and traces are stored as logs in addition to performance data so that it can all be combined for analysis.
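The difference between the two data types can be pictured with two small record shapes. This is a conceptual sketch only; the class and field names are hypothetical and do not mirror the Azure Monitor schema.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass(frozen=True)
class Metric:
    """A single numerical value describing a system at a point in time."""
    name: str
    value: float
    timestamp: datetime


@dataclass
class LogRecord:
    """A record whose set of properties depends on its type."""
    record_type: str
    timestamp: datetime
    properties: dict = field(default_factory=dict)


cpu = Metric("cpu_percent", 72.5, datetime(2020, 1, 1, 10, 0))

# Events and traces are stored as logs, each type with its own properties.
trace = LogRecord("Trace", datetime(2020, 1, 1, 10, 0),
                  {"message": "IDoc posted", "severity": "Info"})

# Performance data can also be written as a log record so that it can be
# combined with events and traces during analysis.
perf = LogRecord("Perf", cpu.timestamp, {"counter": cpu.name, "value": cpu.value})
```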

Log data collected by Azure Monitor can be analyzed with queries to quickly retrieve, consolidate, and analyze collected data. You can create and test queries using Log Analytics in the Azure portal and then either directly analyze the data using these tools or save queries for use with visualizations or alert rules.

Azure Monitor uses a version of the Kusto query language used by Azure Data Explorer that is suitable for simple log queries but also includes advanced functionality such as aggregations, joins, and smart analytics. You can quickly learn the query language using multiple lessons.
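For a sense of what such a log query looks like, the sketch below builds a Kusto query as a Python string. It assumes Windows performance counters are being collected into the `Perf` table of a Log Analytics workspace; the `avg_cpu_query` helper and the computer name are hypothetical, and the query is only composed here, not executed.

```python
def avg_cpu_query(computer, bin_minutes=5, lookback_hours=1):
    """Build a Kusto query for average CPU usage of one computer."""
    if '"' in computer:
        # Keep the illustration safe from breaking out of the string literal.
        raise ValueError("computer name must not contain double quotes")
    return "\n".join([
        "Perf",
        f"| where TimeGenerated > ago({lookback_hours}h)",
        f'| where Computer == "{computer}"',
        '| where ObjectName == "Processor" and CounterName == "% Processor Time"',
        f"| summarize AvgCpu = avg(CounterValue) by bin(TimeGenerated, {bin_minutes}m)",
        "| order by TimeGenerated asc",
    ])


query = avg_cpu_query("sap-app-01")
print(query)
```

The printed text can be pasted into Log Analytics in the Azure portal to test it, then saved for use with a visualization or an alert rule.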

Data collected by Azure Monitor

Azure Monitor can collect data from a variety of sources. You can think of monitoring data for your applications in tiers ranging from your application, any operating system and services it relies on, down to the platform itself. Azure Monitor collects data from each of the following tiers:

Monitoring data is only useful if it can increase your visibility into the operation of your computing environment. Azure Monitor includes several features and tools that provide valuable insights into your applications and the other resources that they depend on. Monitoring solutions and features such as Application Insights and Azure Monitor for containers provide deep insights into different aspects of your application and specific Azure services.

Application Insights

Application Insights monitors the availability, performance, and usage of your web applications whether they’re hosted in the cloud or on-premises. It leverages the powerful data analysis platform in Azure Monitor to provide you with deep insights into your application’s operations and diagnose errors without waiting for a user to report them. Application Insights includes connection points to a variety of development tools and integrates with Visual Studio to support your DevOps processes.

Azure Lighthouse offers service providers a single control plane to view and manage Azure across all their customers with higher automation, scale, and enhanced governance. With Azure Lighthouse, service providers can deliver managed services using comprehensive and robust management tooling built into the Azure platform. This offering can also benefit enterprise IT organizations managing resources across multiple tenants.

Benefits

Azure Lighthouse helps you to profitably and efficiently build and deliver managed services for your customers. The benefits include:

- Management at scale: Customer engagement and life-cycle operations to manage customer resources are easier and more scalable.

- Greater visibility and precision for customers: Customers whose resources you’re managing will have greater visibility into your actions and precise control over the scope they delegate for management, while your IP is preserved.

- Comprehensive and unified platform tooling: Our tooling experience addresses key service provider scenarios, including multiple licensing models such as EA, CSP and pay-as-you-go. The new capabilities work with existing tools and APIs, licensing models, and partner programs such as the Cloud Solution Provider program (CSP). The Azure Lighthouse options you choose can be integrated into your existing workflows and applications, and you can track your impact on customer engagements by linking your partner ID.

There are no additional costs associated with using Azure Lighthouse to manage your customers’ Azure resources.

Capabilities

Azure Lighthouse includes multiple ways to help streamline customer engagement and management:

- Azure delegated resource management: Manage your customers’ Azure resources securely from within your own tenant, without having to switch context and control planes. For more info, see Azure delegated resource management.

- New Azure portal experiences: View cross-tenant info in the new My customers page in the Azure portal. A corresponding Service providers blade lets your customers view and manage service provider access. For more info, see View and manage customers and View and manage service providers.

- Azure Resource Manager templates: Perform management tasks more easily, including onboarding customers for Azure delegated resource management. For more info, see our samples repo and Onboard a customer to Azure delegated resource management.

- Managed Services offers in Azure Marketplace: Offer your services to customers through private or public offers, and have them automatically onboarded to Azure delegated resource management, as an alternative to onboarding using Azure Resource Manager templates. For more info, see Managed services offers in Azure Marketplace.

- Azure managed applications: Package and ship applications that are easy for your customers to deploy and use in their own subscriptions. The application is deployed into a resource group that you access from your tenant, letting you manage the service as part of the overall Azure Lighthouse experience. For more info, see Azure managed applications overview.
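To illustrate the Azure Resource Manager template route to onboarding, the sketch below assembles a parameter file as a Python dictionary. The parameter names (`mspOfferName`, `mspOfferDescription`, `managedByTenantId`, `authorizations`) follow the published Azure Lighthouse sample templates as we recall them and should be checked against the samples repo before use; the tenant and principal IDs, the offer text, and the `lighthouse_parameters` helper are placeholders.

```python
import json

# Built-in Azure role definition ID for the Reader role.
READER_ROLE_ID = "acdd72a7-3385-48ef-bd42-f606fba81ae7"


def lighthouse_parameters(offer_name, offer_description,
                          managing_tenant_id, authorizations):
    """Build a parameter file body for a delegated resource management template."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/"
                   "2015-01-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "mspOfferName": {"value": offer_name},
            "mspOfferDescription": {"value": offer_description},
            "managedByTenantId": {"value": managing_tenant_id},
            "authorizations": {"value": authorizations},
        },
    }


params = lighthouse_parameters(
    offer_name="SAP landscape monitoring",                      # placeholder
    offer_description="Read-only monitoring of SAP resources",  # placeholder
    managing_tenant_id="00000000-0000-0000-0000-000000000000",  # placeholder
    authorizations=[{
        "principalId": "11111111-1111-1111-1111-111111111111",  # placeholder
        "principalIdDisplayName": "Monitoring operators",
        "roleDefinitionId": READER_ROLE_ID,
    }],
)
params_json = json.dumps(params, indent=2)
```

Granting only the Reader role in this example reflects the precise control over delegated scope mentioned in the benefits above; a real offer would list whichever roles the managed service actually needs.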

Azure Monitor stores log data in a Log Analytics workspace, which is an Azure resource and a container where data is collected and aggregated, and which serves as an administrative boundary. While you can deploy one or more workspaces in your Azure subscription, there are several considerations you should understand to ensure that your initial deployment follows our guidelines and gives you a cost-effective, manageable, and scalable deployment that meets your organization’s needs.

Data in a workspace is organized into tables, each of which stores different kinds of data and has its own unique set of properties based on the resource generating the data. Most data sources will write to their own tables in a Log Analytics workspace.

A Log Analytics workspace provides:

- A geographic location for data storage.

- Data isolation by granting different users access rights following one of our recommended design strategies.

- Scope for configuration of settings like pricing tier, retention, and data capping.

Planning a workspace deployment therefore calls for an overview of the design and migration considerations, an overview of access control, and an understanding of the design implementations recommended for your IT enterprise.

We learned several important lessons with our UTP implementation for SAP on Azure. These lessons helped inform our progress of UTP development, and they’ve given us best practices to leverage in future projects, including:

- Perform a proper inventory of internal processes. You must be aware of events within a process before you can capture them. Performing a complete and informed inventory of your business processes is critical to capturing the data required for end-to-end business-process monitoring.

- Build for true end-to-end telemetry. Capture all events from all processes and gather telemetry appropriately. Data points from all parts of the business process—including external components—are critical to achieving true end-to-end telemetry.

- Build for Azure-native SAP. SAP on Azure is easier, and instrumenting SAP processes becomes more efficient and effective when SAP components are built for Azure.

- Encourage data-usage models and standards across the organization. Data standards are critical for an accurate end-to-end view. If data is stored in different formats or instrumentation in various parts of the business process, the end reporting results won’t accurately represent the state of the business process.

Microsoft is continually refining and improving business-process monitoring of SAP on Azure with UTP. It has enabled us to keep key business users informed of business process flow, provided a complete view of business process health to leadership, and helped our engineering teams create a more robust and efficient SAP environment. Telemetry and business-driven monitoring with UTP have transformed the visibility we have into our SAP on Azure environment, and our continuing journey toward deeper business insight and intelligence is making our entire business better.